11: Search and the Web

This week we’ll return to a large directed information network: a snapshot of the Web. Download the Stanford Web Graph from the Stanford Large Network Dataset Collection, which is also available on the Datasets page in the textbook. (You may already have this from your Directed Network homework.) In this dataset, nodes represent pages and edges represent hyperlinks between those pages.

Keep in mind that this network has a huge number of nodes and edges; it’s far larger than any network we’ve used so far. Some of your code might take a little longer to run than usual, but if it’s taking more than 5 minutes or so there may be something else wrong. Also, you do not need to unzip the txt.gz file. You can read the file directly without unzipping it, and that will save space on your computer.
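As a minimal sketch of reading a gzipped edge list without unzipping it: NetworkX's `read_edgelist` opens paths ending in `.gz` directly. The snippet below writes a tiny stand-in file (a hypothetical `sample-edges.txt.gz`) so that it runs without the real download; for the assignment you would pass the path to your own copy of the Stanford file instead.

```python
import gzip
import os
import tempfile

import networkx as nx

# Write a tiny stand-in edge list so this sketch runs without the real download.
# The file name and contents here are made up for illustration.
sample_path = os.path.join(tempfile.mkdtemp(), "sample-edges.txt.gz")
with gzip.open(sample_path, "wt") as f:
    f.write("# FromNodeId\tToNodeId\n1\t2\n1\t3\n2\t3\n")

# read_edgelist decompresses .gz paths on the fly and skips lines starting with "#".
# create_using=nx.DiGraph keeps edge direction; nodetype=int stores node ids as integers.
G = nx.read_edgelist(sample_path, create_using=nx.DiGraph, nodetype=int)

print(G.number_of_nodes(), G.number_of_edges())
```

Passing `create_using=nx.DiGraph` matters here: without it the hyperlinks would be loaded as undirected edges.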

In a short Jupyter notebook report, answer the following questions about this network. Don’t simply calculate the answers: make sure you’re fully explaining (in writing) the metrics and visualizations that you generate. Consider the Criteria for Good Reports as a guide. You can create markdown cells with section headers to separate the different sections of the report. Rather than number the report as if you’re answering distinct questions, use the questions as a guide to do some data storytelling, i.e. explain this network’s data in an organized way.

Using the Hubs and Authorities method outlined on pages 403 and 404 of Networks, Crowds, and Markets, create a function to calculate non-normalized hub and authority scores for every node. Run 5 iterations of this function, then add the resulting scores as node attributes and create a pandas DataFrame to view the scores.
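One possible shape for this function, shown on a toy graph rather than the Web snapshot. This is a sketch, not the only valid approach: the function name `hubs_and_authorities`, the toy edges, and the attribute names `hub` and `authority` are all made up for illustration. It assumes every score starts at 1.0 and that each round applies the authority update first and then the hub update, which is how the textbook procedure is usually read.

```python
import networkx as nx
import pandas as pd


def hubs_and_authorities(G, iterations=5):
    """Return (hubs, authorities) dicts of non-normalized scores."""
    hubs = {node: 1.0 for node in G}
    auths = {node: 1.0 for node in G}
    for _ in range(iterations):
        # Authority update: sum the hub scores of the pages that link to this page.
        auths = {n: sum(hubs[p] for p in G.predecessors(n)) for n in G}
        # Hub update: sum the (new) authority scores of the pages this page links to.
        hubs = {n: sum(auths[s] for s in G.successors(n)) for n in G}
    return hubs, auths


# Toy graph: a links to c and d, b links to c.
toy = nx.DiGraph([("a", "c"), ("b", "c"), ("a", "d")])

hubs, auths = hubs_and_authorities(toy, iterations=5)
nx.set_node_attributes(toy, hubs, "hub")
nx.set_node_attributes(toy, auths, "authority")

# One row per node, one column per node attribute.
scores = pd.DataFrame.from_dict(dict(toy.nodes(data=True)), orient="index")
print(scores)
```

Because the scores are never normalized, they grow quickly with each iteration; that is expected for this version of the method.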

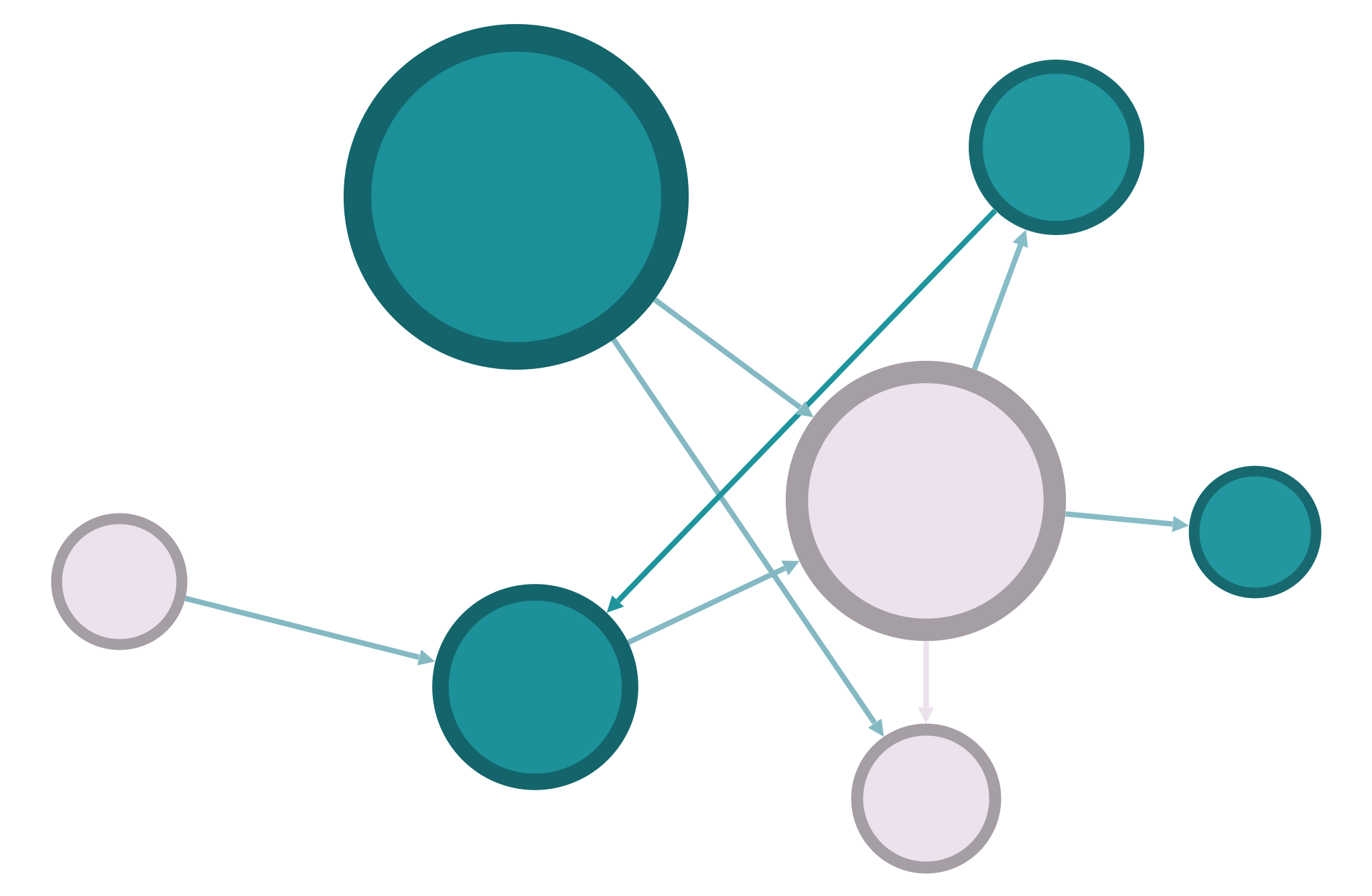

Export the network (with hub and authority scores) to Gephi and visualize both scores in the same graph. Which nodes seem to have high hub scores, and which have high authority scores? Do some have both? Discuss whether the results are surprising—would we expect the same nodes to have high scores as both hubs and authorities, or not?
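Gephi can open several file formats; the sketch below uses GEXF via `nx.write_gexf`, which is one reasonable choice rather than a requirement. The toy graph, the score values, and the output file name are placeholders for illustration; node attributes set before the export travel with the file and should be available in Gephi for sizing or coloring nodes.

```python
import os
import tempfile

import networkx as nx

# Toy graph with placeholder scores already attached as node attributes.
G = nx.DiGraph([("a", "c"), ("b", "c"), ("a", "d")])
nx.set_node_attributes(G, {"a": 3.0, "b": 2.0, "c": 0.0, "d": 0.0}, "hub")
nx.set_node_attributes(G, {"a": 0.0, "b": 0.0, "c": 2.0, "d": 1.0}, "authority")

# Write to a temporary folder here; in your notebook, pick any path you like.
out_path = os.path.join(tempfile.mkdtemp(), "toy-hits.gexf")
nx.write_gexf(G, out_path)
print("Wrote", out_path)
```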

Returning to Python, use the built-in NetworkX functions for PageRank and for HITS hubs and authorities, and add all three of these scores as node attributes and as columns in your dataframe. Besides normalization, what do you notice about the differences between these scores and the ones you calculated? (You’ll need to pay attention to Python’s scientific notation for numbers.) Explain the difference between PageRank and HITS, and say a little bit about why these measures might differ from your implementation.
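A rough sketch of calling the built-in functions on a toy graph. `nx.pagerank` returns one dictionary of scores, while `nx.hits` returns a pair of dictionaries (hubs first, then authorities). The toy edges and the column names are made up for illustration, and the starting value of 1.0 per node follows the note on `nstart` in this assignment.

```python
import networkx as nx
import pandas as pd

# Toy graph: a links to c and d, b links to c.
G = nx.DiGraph([("a", "c"), ("b", "c"), ("a", "d")])

# Starting value of 1.0 for every node, passed to HITS through nstart.
nstart = dict.fromkeys(G, 1.0)

pagerank = nx.pagerank(G)
hits_hubs, hits_auths = nx.hits(G, nstart=nstart)

# Attach all three scores as node attributes.
nx.set_node_attributes(G, pagerank, "pagerank")
nx.set_node_attributes(G, hits_hubs, "hits_hub")
nx.set_node_attributes(G, hits_auths, "hits_authority")

# One column per score, indexed by node.
df = pd.DataFrame(
    {"pagerank": pagerank, "hits_hub": hits_hubs, "hits_authority": hits_auths}
)
print(df)
```

By default both functions return normalized scores (each set sums to 1), so on a large graph individual values are small and often print in scientific notation.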

Important notes:

- You may need to refer back to our directed graph exercises to remember how to import a DiGraph object correctly.
- Two NetworkX directed graph methods, `.successors()` and `.predecessors()`, will be helpful for calculating hub and authority scores. The first gives you the nodes that your node points to, and the second gives you the nodes that point to your node.
- NetworkX’s HITS implementation takes a dictionary giving a starting value for every node (the `nstart` parameter). In our case, the starting value should be 1.0 for every node.
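It is easy to mix up the two directions, so here is a quick check on a single edge. The node names are hypothetical.

```python
import networkx as nx

G = nx.DiGraph()
G.add_edge("page_a", "page_b")  # page_a links to page_b

# successors: the nodes that page_a points to
out_links = list(G.successors("page_a"))
# predecessors: the nodes that point to page_b
in_links = list(G.predecessors("page_b"))

print(out_links)  # ['page_b']
print(in_links)   # ['page_a']
```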

When you’re finished, remove these instructions from the top of the file, leaving only your own writing and code. Export the notebook as an HTML file, check to make sure everything is formatted correctly, and submit your HTML file to Sakai.